FPGAs are powerful tools in almost every high-tech industry, including test and measurement. In the last decade, application-specific FPGA code in test applications has gone from rare to common. The coding itself can be very complicated. Graphical approaches such as LabVIEW FPGA (LVFPGA) help reduce implementation complexity, allowing developers to focus on algorithms.

What Is LabVIEW FPGA?

LabVIEW FPGA is a graphical design environment that lets engineers program FPGAs without writing low-level HDL code. It streamlines development by focusing on algorithms and hardware behavior rather than gate-level logic, making it ideal for rapid prototyping and complex test or measurement systems.

This abstraction from the low-level implementation lets development happen at a higher level. But what happens when things start to break down in the implementation process? What happens when the logic the application requires will not fit on the FPGA device in the system? What happens when it fits but doesn't meet timing?

Functionality can be reduced, but this is a less-than-ideal solution and typically not even an option. These systems are modular, so more hardware could always be added, but that is an inelegant, brute-force approach that is usually not economically feasible for the project. That leaves the developer needing to optimize the code to meet resource and timing requirements. Optimized code tends to be lower-level and harder to follow and debug.

The Need to Optimize FPGA Code for Timing and Resources

Over many years and countless LVFPGA projects, we have found areas where small tweaks can greatly improve the chances of a successful compile. In this blog, we will discuss one of those techniques: optimizing the reset.

When I was learning to program FPGAs, I was taught how to count and control the number of logic gates and other hard blocks like DSPs and memories. But these elements must be connected to one another for data to move through the algorithm, and this is handled by routing resources. I was taught that you will never run out of routing resources; however, you can run out of "convenient" routing resources. When the convenient ones are gone, the compiler starts creating routes on less ideal paths. The actual process is not this simple, but it is a useful way to visualize it. So, if you are missing timing, chances are the routing is getting congested and paths are getting long.

In LVFPGA, it is sometimes hard to know what the code you write will look like in terms of routing usage. There is a lot of cool technology under the hood converting your diagrams into bitfiles. This process is not perfect, and some things happen that you might not be aware of, which can hurt your chances of a successful compile.

How to Optimize FPGA Reset in LabVIEW

Two of the biggest items that can hurt timing are reset logic and the enable chain. I want to take a closer look at the reset logic, because this is a place where some very simple changes can have big benefits. Reset logic allows host software, such as the user interface, to return the FPGA to a known state programmatically. The logic this requires creates a reset line with a large fanout, because it must reach every register that can be reset. This fanout is what hurts timing. So how do we fix it? The first thing you need to determine is whether programmatic reset is important. Will your application be OK just re-downloading the bitfile? Re-downloading a bitfile achieves the same goal; it is just slightly slower (on the order of milliseconds) than doing a reset.
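To see why that fanout grows so large, here is a deliberately simplified Python model, not a description of what the LabVIEW compiler actually generates. It assumes the reset line needs one connection per flip-flop in every register that honors reset; the `Register` class and `reset_fanout` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Register:
    """Hypothetical model of one register in the compiled design."""
    name: str
    width: int          # number of flip-flops (bits) in the register
    honors_reset: bool  # True if the register is wired to the FPGA reset line


def reset_fanout(registers):
    """Simplified count of loads on the reset line: one per flip-flop that honors reset."""
    return sum(r.width for r in registers if r.honors_reset)


# A design with 100 feedback nodes, each holding a 32-bit value.
default_design = [Register(f"fb{i}", 32, honors_reset=True) for i in range(100)]
optimized_design = [Register(f"fb{i}", 32, honors_reset=False) for i in range(100)]

print(reset_fanout(default_design))    # 3200
print(reset_fanout(optimized_design))  # 0
```

Real designs are messier than this, but the point of the model holds: every register that honors reset adds another load that the reset line must reach within one clock period.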

If re-downloading the bitfile is an acceptable way to reset, you can modify certain elements to greatly improve timing performance.

Adjusting Feedback Nodes and ‘First Call?’ Properties

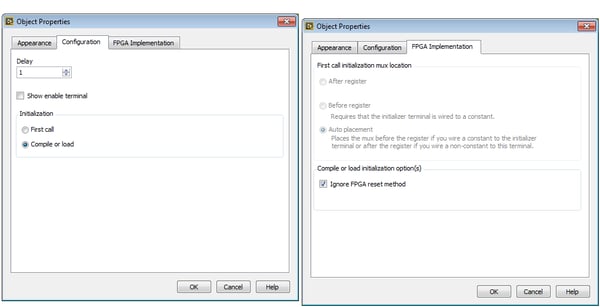

The two items that can be easily changed are 'First Call?' and feedback nodes. By default, feedback nodes are configured to initialize on "First Call". First call means both the first clock after the bitfile is downloaded and the first clock after a software reset. It is the first clock after reset that causes issues, because supporting it requires the reset logic. Use the object properties to change the initialization to "Compile or load". In addition, you will need to check the "Ignore FPGA reset method" option on the FPGA Implementation tab. As a result, your bitfile will still give the register a default value, but there will be no way to return it to that value without re-downloading the bitfile.
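LabVIEW FPGA code is graphical, so the following is only a Python behavioral sketch of the two configurations, assuming a feedback node acts as a register that outputs its stored value and stores a new one on each clock. The `FeedbackRegister` class and its `honors_reset` flag are hypothetical: `honors_reset=True` stands for the default "First Call" setting, and `honors_reset=False` stands for "Compile or load" with "Ignore FPGA reset method" checked.

```python
class FeedbackRegister:
    """Behavioral model of a feedback node's register (not LabVIEW's implementation)."""

    def __init__(self, initial, honors_reset):
        self.initial = initial            # default value baked into the bitfile
        self.honors_reset = honors_reset  # False models "Compile or load" + "Ignore FPGA reset method"
        self.value = None

    def load_bitfile(self):
        # Downloading the bitfile always sets the register to its default value.
        self.value = self.initial

    def reset(self):
        # A programmatic reset only reaches registers wired to the reset line.
        if self.honors_reset:
            self.value = self.initial

    def clock(self, new_value):
        # Output the stored value, then store the new one (one clock of delay).
        previous = self.value
        self.value = new_value
        return previous


default = FeedbackRegister(initial=0, honors_reset=True)
optimized = FeedbackRegister(initial=0, honors_reset=False)
for reg in (default, optimized):
    reg.load_bitfile()
    reg.clock(42)  # some data flows through
    reg.reset()    # host requests a programmatic reset

print(default.value)    # 0: back to the default
print(optimized.value)  # 42: keeps its last value until the bitfile is reloaded
```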

Managing Simulation and Programmatic Reset Differences

It is important to understand the consequences of these settings. The obvious one is that programmatic reset will no longer behave as it did before. Perhaps more important, desktop simulations will also behave differently. When FPGA code is opened on the desktop to be run for debugging, opening the code is equivalent to loading a bitfile onto the FPGA: all registers are set to their expected default values. The first time you run the VI, it will operate with correct initial values. Additional runs (without closing and reopening the VI) rely on a programmatic reset of the feedback nodes, which is the capability we removed in the previous step. This leaves the annoying requirement of closing and reopening VIs constantly.
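The simulation problem can be sketched the same way. This hypothetical Python model assumes a feedback node used as a running sum, treats opening the VI as a bitfile load, and treats every run after the first as a programmatic reset; the `AccumulatorRegister` class and `simulate` function are illustrative names, not LabVIEW APIs.

```python
class AccumulatorRegister:
    """Hypothetical model of a feedback node used as a running sum."""

    def __init__(self, initial, honors_reset):
        self.initial = initial
        self.honors_reset = honors_reset
        self.value = initial
        self.first_run = True

    def open_vi(self):
        # Opening the VI on the desktop is equivalent to loading a bitfile.
        self.value = self.initial
        self.first_run = True

    def run_vi(self, samples):
        # Runs after the first one rely on a programmatic reset of the register.
        if not self.first_run and self.honors_reset:
            self.value = self.initial
        self.first_run = False
        for s in samples:
            self.value += s
        return self.value


def simulate(honors_reset):
    reg = AccumulatorRegister(initial=0, honors_reset=honors_reset)
    reg.open_vi()
    first = reg.run_vi([1, 2, 3])
    second = reg.run_vi([1, 2, 3])  # run again without reopening the VI
    reg.open_vi()                   # close and reopen the VI
    third = reg.run_vi([1, 2, 3])
    return first, second, third


print(simulate(honors_reset=True))   # (6, 6, 6)
print(simulate(honors_reset=False))  # (6, 12, 6): second run starts from stale state
```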

Creating Wrappers for Feedback Nodes

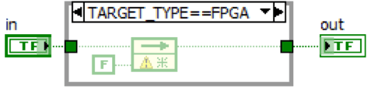

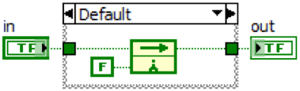

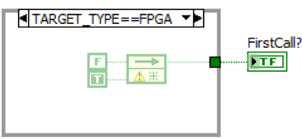

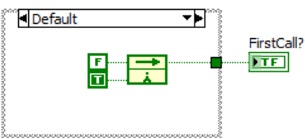

The solution is to create wrappers around the feedback nodes. This doesn't have to happen for all feedback nodes, just those whose value on the first clock is important. The wrappers use the Conditional Disable Structure to operate differently on the PC versus the FPGA.
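As a rough Python analogue of such a wrapper, the sketch below picks the feedback-node configuration based on the target. The `Target` enum, `FeedbackRegister` class, and `feedback_wrapper` function are hypothetical stand-ins for the diagram, assuming the PC case keeps "First Call" initialization and the FPGA case uses "Compile or load" with "Ignore FPGA reset method".

```python
from enum import Enum


class Target(Enum):
    """Hypothetical stand-in for the condition a Conditional Disable Structure tests."""
    DESKTOP = "desktop simulation"
    FPGA = "FPGA compile"


class FeedbackRegister:
    """Behavioral model of a feedback node's register (not LabVIEW's implementation)."""

    def __init__(self, initial, honors_reset):
        self.initial = initial
        self.honors_reset = honors_reset
        self.value = initial  # value after the bitfile (or VI) is loaded

    def reset(self):
        # Only registers wired to the reset line return to their default.
        if self.honors_reset:
            self.value = self.initial


def feedback_wrapper(initial, target):
    """Hypothetical wrapper VI: choose the feedback-node configuration per target."""
    if target is Target.DESKTOP:
        # PC case: keep "First Call" initialization so every simulation run starts clean.
        return FeedbackRegister(initial, honors_reset=True)
    # FPGA case: "Compile or load" plus "Ignore FPGA reset method", so no reset logic.
    return FeedbackRegister(initial, honors_reset=False)


for target in Target:
    reg = feedback_wrapper(initial=0, target=target)
    reg.value = 99  # state left over from a previous run
    reg.reset()     # programmatic reset
    print(target.value, reg.value)
# desktop simulation 0
# FPGA compile 99
```

In the actual diagram, the Conditional Disable Structure is resolved at compile time, so only the FPGA case ends up in the bitfile; the Python `if` runs at run time and only stands in for that choice.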

While not as prevalent as feedback nodes, 'First Call?' primitives also add reset logic that can easily be removed. The technique is identical to the one used for feedback nodes, as shown in the images above.
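One way to picture a 'First Call?' replacement is as a one-bit register that loads as True and is cleared after its first clock. The Python sketch below assumes exactly that; `FirstCallRegister` is a hypothetical name, and `honors_reset` again separates the desktop flavor (reset restores the flag) from the FPGA flavor (reset ignored).

```python
class FirstCallRegister:
    """Hypothetical model of a 'First Call?' replacement: a one-bit register
    that loads as True and is cleared after its first clock."""

    def __init__(self, honors_reset):
        self.honors_reset = honors_reset
        self.state = True  # default value baked into the bitfile

    def reset(self):
        # Desktop flavor: a programmatic reset makes the next clock a first call again.
        if self.honors_reset:
            self.state = True

    def clock(self):
        # Report whether this is the first clock, then clear the flag.
        first = self.state
        self.state = False
        return first


desktop = FirstCallRegister(honors_reset=True)
fpga = FirstCallRegister(honors_reset=False)

print([fpga.clock() for _ in range(3)])  # [True, False, False]

for reg in (desktop, fpga):
    reg.clock()  # consume the first call
    reg.reset()  # programmatic reset
print(desktop.clock(), fpga.clock())  # True False
```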

Build a Reusable Utility Library

I suggest building a utility library of common feedback nodes and the first call register. You can move this library from project to project instead of rebuilding it every time. Then get in the habit of using these wrapper VIs instead of the feedback nodes themselves. For any register where the first-clock value is not important, I recommend using normal feedback nodes, but even then, configure them to ignore reset. That way, you can scan your code for feedback nodes that lack the yellow warning sign (which indicates that reset has been removed), since those still carry reset logic.

You will find that these simple steps make a large improvement in timing performance, especially in large applications or in clock domains that run at high rates.

Talk to Averna’s Engineering Experts

From optimizing FPGA reset logic to designing complete test systems, Averna’s engineers bring hands-on expertise in LabVIEW FPGA and real-world deployment. Our teams help you overcome performance challenges so your system runs efficiently. Reach out to our engineering experts to see how we can support your next project.

– Andy Brown, Principal Systems Engineer at Averna