The intricacies and high speeds of Machine Vision require great power. But as we know, with great power comes great responsibility. Vision is becoming more common in production and assembly for applications like product verification, identification, measurement, traceability, classification and defect detection. This is due to the speed and precision that a vision system delivers, from finding the tiniest flaw (the needle in the haystack) to consistently placing a logo on exactly the right spot on countless products. Plus, it removes tedious tasks from valuable employees, allowing them to put their smarts elsewhere.

Incorporating Machine Vision into a production line can bring huge payoffs, but the key to innovation is determining how a technology can be used to its maximum potential. As with everything else in the world, the best place to start is at the beginning.

Define the Machine Vision Application

Why did you get on the Vision train in the first place? What are you trying to accomplish? Is this for feature & defect detection? Measurement & identification? Active alignment or positioning? Analysis & calibration? Guided manufacturing? Any of these applications is a good fit for machine vision, but each will be managed differently. In some cases, adding a field-programmable gate array (FPGA) is a straightforward way to increase speed and accuracy in a machine vision solution. In other cases, it is less appropriate. Let’s figure this out.

What is an FPGA Anyhow?

Great question. An FPGA is an integrated circuit made up of configurable logic blocks that the end user configures after manufacturing. It’s used to build complicated logic circuits without dedicating the chip to one specific application. It can be reconfigured to change its purpose, as opposed to a system that is set in stone. Basically, with enough logic blocks, an FPGA can be configured to implement almost any digital logic function. Among other things, FPGAs are commonly used for:

- Signal processing.

- Visual analysis or enhancements.

- Main processor acceleration.

- Application simulation.

Applying FPGAs to Machine Vision

There are a variety of hardware and software tools available for FPGAs, and National Instruments has even developed a vision-specific development module to support the application. Some tasks that are performed by FPGAs are an excellent fit for Machine Vision, and others not so much. When they do fit, they fit just right. There are two ways to integrate an FPGA into your system:

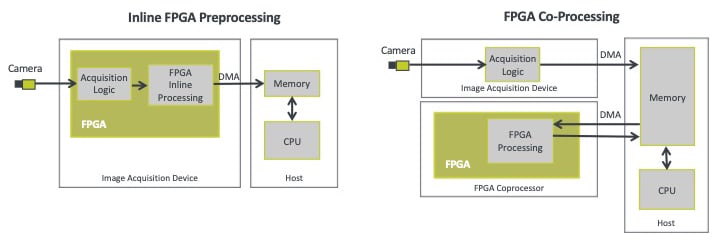

1. Inline Architecture

An inline FPGA architecture is ideal for quality verification because the FPGA can methodically scan pixels very quickly. Adding one or more FPGAs inline with your architecture involves connecting the camera directly to the pins of the FPGA, so the pixels of the image pass straight through it. The FPGA performs pre-processing tasks that are thereby offloaded from the CPU, increasing overall system throughput. The amount of data that the CPU has to process is reduced, because the FPGA has already narrowed the image down to the area of interest. It also allows high-speed control operations to be processed directly within the FPGA, again without using the CPU, which shortens the response time of the whole system.
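To make the "narrowing down" step concrete, here is a minimal sketch in plain Python that imitates what an inline stage does: it sees pixels one at a time in raster order, keeps a running bounding box of anything brighter than a threshold, and hands only that region on. The function names, the threshold and the tiny test image are all assumptions made for illustration. A real FPGA would implement this in hardware logic, not Python.

```python
from typing import Iterable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom), inclusive


def roi_from_pixel_stream(
    pixels: Iterable[int], width: int, threshold: int
) -> Optional[Box]:
    """Scan pixels in raster order and return the bounding box of every
    pixel brighter than `threshold`, or None if there is none.

    Only four integers of state are kept, which mimics a streaming stage
    that never stores the full frame.
    """
    left = top = right = bottom = None
    for index, value in enumerate(pixels):
        if value <= threshold:
            continue
        row, col = divmod(index, width)
        if top is None:
            left, top, right, bottom = col, row, col, row
        else:
            left = min(left, col)
            right = max(right, col)
            bottom = row  # raster order means rows never decrease
    if top is None:
        return None
    return left, top, right, bottom


def crop(image: List[List[int]], box: Box) -> List[List[int]]:
    """Return only the region of interest, i.e. what the CPU would receive."""
    left, top, right, bottom = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]


# Hypothetical 4 x 6 frame with one bright feature.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 9, 0],
    [0, 0, 0, 0, 0, 0],
]
stream = (pixel for row in image for pixel in row)
box = roi_from_pixel_stream(stream, width=6, threshold=5)
roi = crop(image, box)
print(box, roi)
```

In this toy frame the CPU would receive 6 pixels instead of 24, which is the kind of data reduction the inline architecture is after.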

2. Co-Processing

As opposed to an inline architecture where one runs after the other, co-processing architecture means the CPU and the FPGA are working together, sharing the brunt of the work. The CPU acquires the image for processing and sends it over to the FPGA. Tasks like filtering, color space conversion or binary morphology (just a few examples) can be performed without the use of the CPU. If all the tasks can be performed by the FPGA, then only the results are sent back to the processor. If the application includes more complicated tasks, the CPU will take care of those. Either way, this offloads a lot of the work from the processor, allowing the system to run much faster.
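The division of labour in co-processing can be sketched in software. In the example below, `offloaded_stage` is a stand-in for the FPGA: it thresholds the image, applies a 3x3 binary erosion (one of the binary morphology operations mentioned above) and returns only a single number. `cpu_decision` is the part left to the processor. All names, the threshold value and the sample image are assumptions for illustration, and the code only models who does what, not FPGA speed.

```python
from typing import List

Image = List[List[int]]


def binarize(image: Image, threshold: int) -> Image:
    """Turn a grayscale image into a 0/1 image."""
    return [[1 if pixel > threshold else 0 for pixel in row] for row in image]


def erode_3x3(binary: Image) -> Image:
    """Binary erosion with a 3x3 square; border pixels become background."""
    height, width = len(binary), len(binary[0])
    out = [[0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            out[r][c] = int(
                all(binary[r + dr][c + dc]
                    for dr in (-1, 0, 1) for dc in (-1, 0, 1))
            )
    return out


def offloaded_stage(image: Image, threshold: int) -> int:
    """Stand-in for the FPGA: returns only the result, not the image."""
    return sum(map(sum, erode_3x3(binarize(image, threshold))))


def cpu_decision(surviving_pixels: int, min_pixels: int) -> bool:
    """The CPU only has to act on the small result it gets back."""
    return surviving_pixels >= min_pixels


# Hypothetical frame: a solid 3x3 blob plus one isolated bright speck.
image = [
    [0,   0,   0,   0, 0,   0],
    [0, 200, 200, 200, 0,   0],
    [0, 200, 200, 200, 0,   0],
    [0, 200, 200, 200, 0, 180],
    [0,   0,   0,   0, 0,   0],
]
surviving = offloaded_stage(image, threshold=128)
print(surviving, cpu_decision(surviving, min_pixels=1))
```

The erosion removes the isolated speck and shrinks the blob to its center, so the processor receives one integer rather than a full frame.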

When is it Time to Embrace an FPGA?

The greatest benefit an FPGA can offer such an environment is the ability to perform multiple tasks in parallel as opposed to sequentially. This is the big difference between an FPGA and a CPU and can be a game changer. A single CPU core generally works through operations one after another, so consider the difference here. Hypothetically, let’s say you are running an algorithm that needs to perform 5 operations. Each operation takes 5 ms. If one runs after another on a CPU, that is a total of 25 ms. If they all run at the same time on an FPGA, with a millisecond or two added before the first pixel is processed, the total is roughly 6 to 7 ms. The math speaks for itself: run time is cut by more than 70%.
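The arithmetic in this hypothetical can be written out in a few lines of Python. The function names and the 2 ms startup figure (the upper end of "a ms or two") are assumptions for illustration. This is a back-of-the-envelope model, not a benchmark.

```python
from typing import Sequence


def sequential_latency_ms(op_times_ms: Sequence[float]) -> float:
    """Operations run one after another: total time is the sum."""
    return sum(op_times_ms)


def concurrent_latency_ms(op_times_ms: Sequence[float], startup_ms: float) -> float:
    """Operations run at the same time: total time is the slowest
    operation plus a fixed startup delay."""
    return max(op_times_ms) + startup_ms


ops = [5.0] * 5                                           # five operations, 5 ms each
sequential = sequential_latency_ms(ops)                   # 25.0 ms
concurrent = concurrent_latency_ms(ops, startup_ms=2.0)   # 7.0 ms
saving = 1 - concurrent / sequential                      # about 0.72

print(f"sequential: {sequential} ms, concurrent: {concurrent} ms, "
      f"saving: {saving:.0%}")
```

Under these assumptions the concurrent run takes 7 ms against 25 ms, a reduction of about 72%.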

An example of an application that can really benefit from this is scanning for defects. If a large surface area needs to be verified for dents, scratches, dirt, etc., an FPGA can check color, shape, shading, edges, lines, and corners simultaneously. A CPU alone would typically have to work through those checks one after another.

This is one of many examples where an FPGA is the right tool to optimize a vision system. Understandably, there is often hesitation to add another layer of complexity to an already complicated system. But in the right scenario an FPGA might be exactly what’s needed to get a vision system running at its maximum potential.

If you would like to learn more about FPGAs, there are great resources on the National Instruments’ website.

To determine if an FPGA is right for your vision system, please contact Averna to speak with a Machine Vision expert.
